An introduction to Hadoop

- April 29, 2017

- Posted by: Shachi Marathe

- Category: Big Data, Information Management

Big data is a topic that is ever evolving. Data is of no use if it is not stored and analyzed properly. Hadoop, a technology from the Apache Software Foundation, helps make large volumes of data useful. So Hadoop is an important technology to look at when one wants to learn more about Big Data.

This article will help you learn more about Hadoop and what it actually is. It delves a bit into the history and different versions of Hadoop. For the uninitiated, it also looks at the high-level architecture of Hadoop and its different modules.

Shachi Marathe introduces you to the concept of Hadoop for Big Data. The article touches on the basic concepts of Hadoop, its history, advantages and uses. It also delves into the architecture and components of Hadoop.

Key Concepts

By reading this article you will learn:

- What is Hadoop?

- History and versions of Hadoop

- Advantages of using Hadoop

- Applications where Hadoop can be used

- Architecture / Structure

- Cloud based systems

- Distributed file systems (HDFS)

- MapReduce

- YARN

- Brief on additional components

What is Hadoop?

Hadoop is an open-source framework that enables the storage and processing of large data sets on a clustered environment.

At the time of writing, Hadoop 3.0 is the newest release line. Hadoop is developed by a community of developers and contributors under the Apache Software Foundation.

The Hadoop framework can handle and process thousands of terabytes of data by running applications across many commodity nodes. Hadoop's distributed file system ensures that the failure of a single node does not bring the whole system down.

This robustness has played a key role in making Hadoop a popular choice for processing Big Data in organizations that rely on large volumes of data for their business.

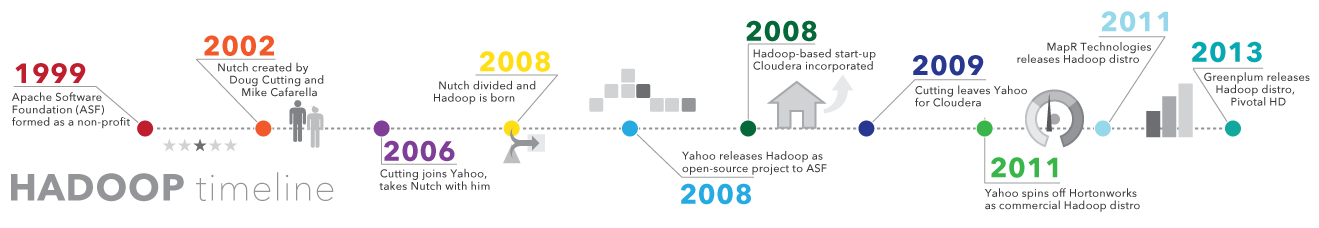

A little bit of History

At the end of the 20th century, Internet usage started picking up worldwide. This led to a lot of data being generated, shared and used across the world. Most of the data was text-based, so basic search engines that indexed the data were created to make it easier to find. But as the data grew in size, simple manual indexing and search started failing, giving way to web crawlers and search-engine companies.

Nutch was an open-source web search engine developed by Doug Cutting and Mike Cafarella. It distributed data and computations across multiple computers so that many tasks could run simultaneously, producing results faster. This was an early step toward automated distributed storage and processing.

Around 2006, the Nutch project was divided into a web crawler component and a distributed computing and processing component. The processing component was named Hadoop (after Doug Cutting's toddler's toy elephant).

In 2008, Hadoop became a top-level open-source project at the Apache Software Foundation, with Yahoo as a major contributor. Hadoop has evolved over the years, and its framework and technology modules are now maintained by the Apache Software Foundation, a global community of software enthusiasts, both developers and contributors.

Hadoop 1.0 became available to the public in December 2011. The framework was based on Google's MapReduce model, in which a job is split into many smaller parts (tasks), each working on a block or fragment of the data, and these parts can run on any node in a cluster (a group of resources, including servers, that together provide high availability, parallel processing and load balancing).

Hadoop 2.0, released in 2013, focused on better resource management and scheduling.

In January 2017, an alpha release of Hadoop 3.0 was made available with further enhancements.

Advantages of Using Hadoop

Some basic advantages of Hadoop are:

- Hadoop is a low-cost framework that can be used either as an in-house deployment or used over the Cloud.

- Hadoop provides a high-performance framework that is highly reliable, scalable and has massive data management capabilities. Together, these form the backbone of an effective application.

- Hadoop can store and process large volumes of data, even when that data is volatile (changes frequently).

- Hadoop works effectively and efficiently on structured as well as unstructured data.

Systems where Hadoop can be used

Hadoop can be used across many applications and systems, depending on an organization's requirements. Here are some examples:

- Web Analytics – helps companies study the visitors to their websites and what they do on the site. The results can then be used to improve marketing strategies.

- Operational Intelligence – data is captured and studied to find gaps in processes and improve them.

- Risk Management and Security Management – data trends can be collected and studied to check transaction patterns and detect fraudulent activity.

- Geo-spatial applications – data from satellites can be gathered and studied. This data is usually voluminous, and correlating it is paramount.

There are many other applications that can use Hadoop implementations, for example to optimize marketing.

The Hadoop Ecosystem

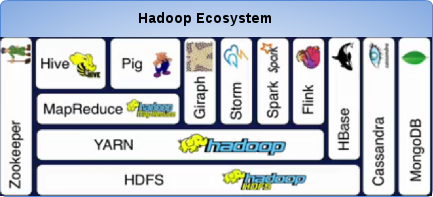

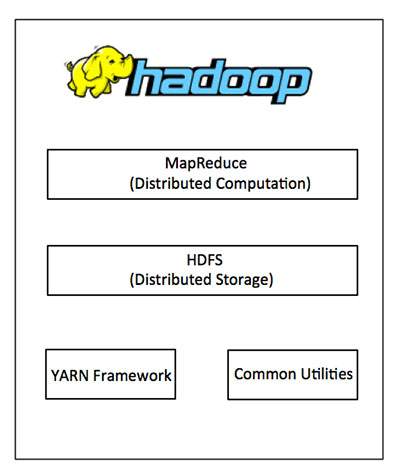

The main components of the Hadoop ecosystem are:

- Hadoop Common – As the name suggests, this component contains the common libraries and utilities that are needed by other Hadoop modules and components.

- Hadoop Distributed File System (HDFS) – Hadoop's distributed file system, which stores data and files in blocks across many nodes in a cluster.

- Hadoop YARN – just as yarn is a thread that can tie things together, Hadoop YARN ties the cluster together by managing its resources and scheduling work on them.

- Hadoop MapReduce – enables programmers to build programs for large-scale data processing within the framework.

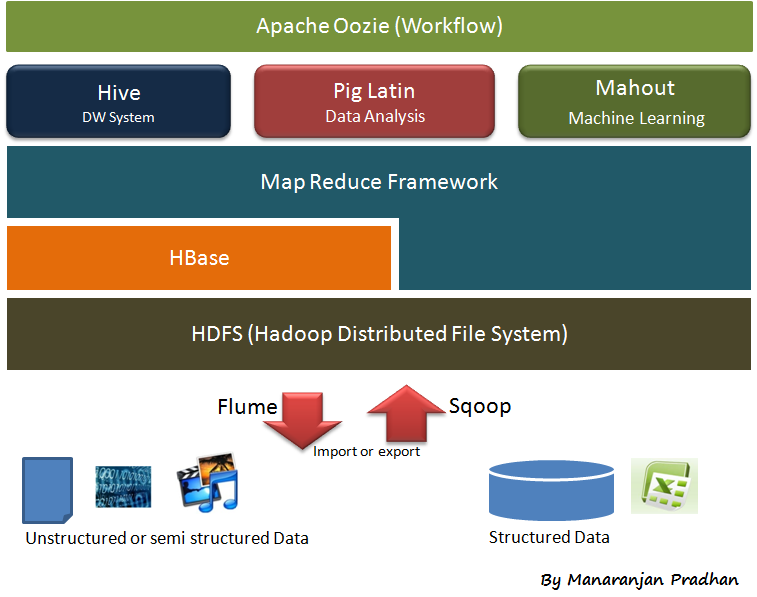

In addition to the components and modules above, the Hadoop platform comes with related applications and modules such as Apache Oozie, Apache Hive, Apache HBase, Apache Mahout and Apache Pig.

Figure # 3: Hadoop Ecosystem details

Hadoop modules are designed so that, even if hardware fails somewhere in the cluster, the framework handles the failure and service is not interrupted. The framework is written mostly in Java, with some parts in C and shell scripts. This makes it easier to adopt and to adapt to your requirements.

What is a cluster?

A cluster is a group. When multiple independent servers are grouped to work together to ensure high availability, scalability and reliability, they form a server cluster. Applications running on server clusters give users uninterrupted access to important resources, improving working efficiency.

If one machine in the cluster fails, its workload is redistributed across the other servers, so clients continue to receive uninterrupted service.
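To make the failover idea concrete, here is a minimal Python sketch that assumes a simple round-robin scheduler. The node names and the `assign` and `fail_node` helpers are hypothetical; they only illustrate how a failed server's work can be spread over the surviving servers. Real cluster managers use far more elaborate policies.

```python
from itertools import cycle

def assign(tasks, nodes):
    """Assign tasks to nodes in round-robin order; returns {node: [tasks]}."""
    if not nodes:
        raise ValueError("a cluster needs at least one node")
    plan = {node: [] for node in nodes}
    for task, node in zip(tasks, cycle(nodes)):
        plan[node].append(task)
    return plan

def fail_node(plan, failed):
    """Remove a failed node and hand its tasks to the remaining nodes."""
    orphaned = plan.pop(failed)
    survivors = list(plan)
    if not survivors:
        raise RuntimeError("no healthy nodes left in the cluster")
    for task, node in zip(orphaned, cycle(survivors)):
        plan[node].append(task)
    return plan

if __name__ == "__main__":
    plan = assign([f"task-{i}" for i in range(6)], ["node-a", "node-b", "node-c"])
    print(plan)
    # node-b goes down; its two tasks move to node-a and node-c
    print(fail_node(plan, "node-b"))
```

No task is lost when `node-b` fails: the total number of assigned tasks stays the same, only their placement changes.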

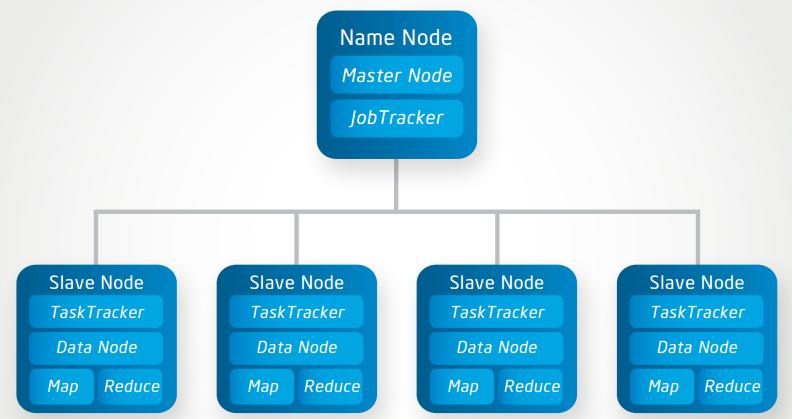

What is a Hadoop Cluster?

A Hadoop cluster, as described above, is a cluster of servers designed to handle large volumes of structured as well as unstructured data in a distributed environment. In a classic (Hadoop 1) cluster, the master runs a NameNode and a JobTracker, which control the slave machines; each slave runs a DataNode and a TaskTracker.

Figure # 4: Hadoop Cluster

As we already know, Big Data involves large volumes of data that are often volatile and unstructured, and the results are often needed quickly. This calls for high processing power, parallel processing, scalability and reliability (tolerance of failures). A Hadoop cluster provides these by allowing more nodes to be added as needed and by replicating data across nodes, so that the failure of a node does not lead to data loss.

What is a Distributed File System?

In a distributed file system (DFS), data is stored on servers, and the files can be accessed by multiple users from multiple locations, with access controlled over the network. Users feel as if they are working on their local machines, but they are actually working with files on shared servers.

What is HDFS?

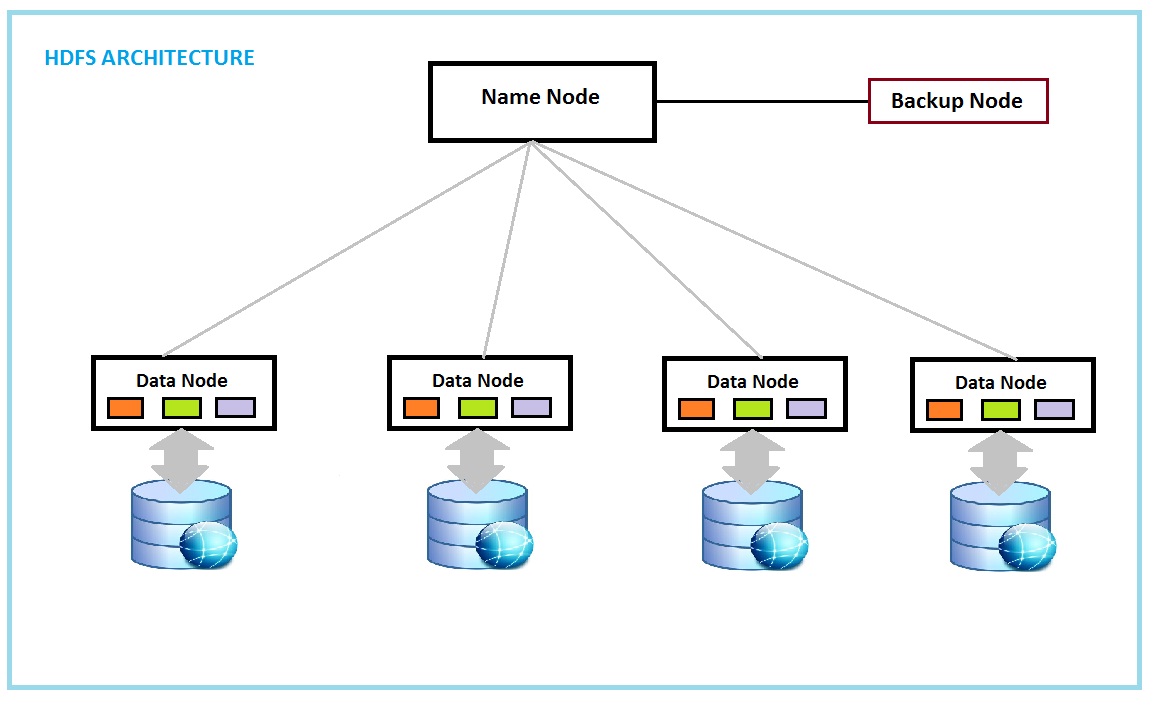

The Hadoop Distributed File System (HDFS) follows a master/slave architecture on the Hadoop cluster. HDFS is scalable and portable, and is written in Java specifically for the Hadoop framework. Typically, a single master node, the NameNode, manages the file system and controls a set of DataNodes, which store the data. Not every node in the cluster needs to run a DataNode, but DataNodes cannot function without a NameNode.

In simpler terms, large data files are stored across multiple machines. Data is replicated across multiple DataNodes, which work together so that the data stays intact and available even if a node fails. HDFS works best when it is used together with Hadoop's own modules, which make the most of it; with other tools, its effectiveness may not be optimal.
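The arithmetic behind block storage and replication can be sketched in a few lines of Python. This sketch assumes the usual HDFS defaults in Hadoop 2 and later, a 128 MB block size (`dfs.blocksize`) and a replication factor of 3 (`dfs.replication`); both are configurable per cluster and per file. The `place_replicas` function is a hypothetical rotating placement used only for illustration; real HDFS uses a rack-aware placement policy.

```python
BLOCK_SIZE = 128 * 1024 * 1024   # assumed default dfs.blocksize (128 MB)
REPLICATION = 3                  # assumed default dfs.replication

def split_into_blocks(file_size):
    """Return the sizes of the blocks a file of file_size bytes occupies.
    Every block is BLOCK_SIZE except possibly the last, smaller one."""
    full, rest = divmod(file_size, BLOCK_SIZE)
    return [BLOCK_SIZE] * full + ([rest] if rest else [])

def place_replicas(num_blocks, datanodes, replication=REPLICATION):
    """Hypothetical placement: each block's replicas go to `replication`
    distinct DataNodes, rotating the starting node to balance the load."""
    if replication > len(datanodes):
        raise ValueError("need at least as many DataNodes as replicas")
    return {
        block: [datanodes[(block + r) % len(datanodes)] for r in range(replication)]
        for block in range(num_blocks)
    }

if __name__ == "__main__":
    MB = 1024 * 1024
    blocks = split_into_blocks(300 * MB)
    print([b // MB for b in blocks])  # [128, 128, 44]
    placement = place_replicas(len(blocks), ["dn1", "dn2", "dn3", "dn4"])
    print(placement)
    # If dn3 fails, every block still has copies on two other DataNodes
    print({b: [n for n in nodes if n != "dn3"] for b, nodes in placement.items()})
```

Because each block lives on three different DataNodes, losing any one node still leaves two copies of every block, which is what lets HDFS survive node failures.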

Figure # 5: HDFS

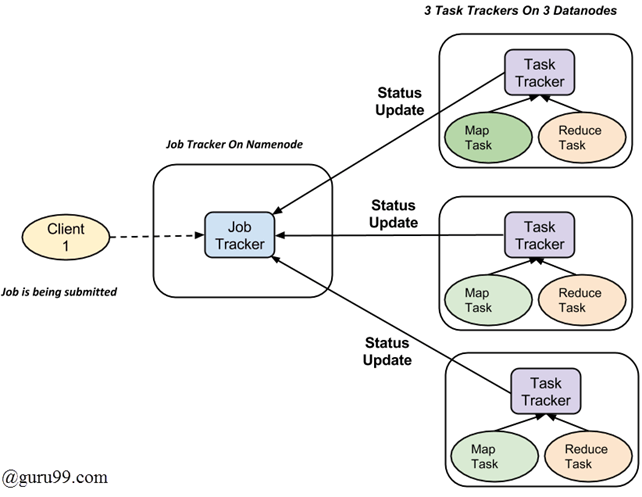

What is MapReduce?

MapReduce is the framework that enables users to write applications that process large volumes of data in parallel across the cluster.

MapReduce works as a job and task system: each MapReduce job is split into multiple tasks, which the framework creates and manages so that they can run in parallel.
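The job and task idea can be illustrated without a cluster. The Python sketch below is a single-machine simulation, not Hadoop's API: a word-count job is split into one map task per input split, the map tasks run concurrently in a thread pool, their output is shuffled (grouped by key), and reduce tasks sum the counts for each word. The function names `map_task`, `shuffle`, `reduce_task` and `run_job` are hypothetical.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor

def map_task(split):
    """Map phase: emit (word, 1) for every word in one input split."""
    return [(word.lower(), 1) for line in split for word in line.split()]

def shuffle(mapped_outputs):
    """Shuffle phase: group the values emitted by all map tasks by key."""
    groups = defaultdict(list)
    for pairs in mapped_outputs:
        for key, value in pairs:
            groups[key].append(value)
    return groups

def reduce_task(key, values):
    """Reduce phase: combine all values for one key."""
    return key, sum(values)

def run_job(lines, num_splits=2):
    # Divide the input lines round-robin into num_splits input splits
    splits = [lines[i::num_splits] for i in range(num_splits)]
    with ThreadPoolExecutor() as pool:
        mapped = list(pool.map(map_task, splits))  # map tasks run concurrently
        grouped = shuffle(mapped)
        results = pool.map(lambda kv: reduce_task(*kv), grouped.items())
        return dict(results)

if __name__ == "__main__":
    print(run_job(["the quick brown fox", "the lazy dog", "The fox"]))
```

In real Hadoop, the splits come from HDFS blocks, and the map and reduce tasks run on different machines, but the flow of data through map, shuffle and reduce is the same.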

The Hadoop framework is implemented in Java, but MapReduce applications need not be written in Java.
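One common way to write MapReduce programs in a language other than Java is Hadoop Streaming, which runs any executable as the mapper or reducer. Records pass through standard input and output as tab-separated key/value lines, and the reducer receives its input sorted by key. The Python sketch below assumes a hypothetical input of `year<TAB>temperature` lines and finds the maximum temperature per year; the `mapper` and `reducer` names and the command-line layout are illustrative choices, not part of Hadoop.

```python
import sys
from itertools import groupby

def mapper(lines):
    """Emit 'year<TAB>temperature' for each well-formed input record."""
    for line in lines:
        parts = line.strip().split("\t")
        if len(parts) != 2:
            continue  # skip malformed records
        year, temp = parts
        try:
            value = int(temp)
        except ValueError:
            continue  # skip non-numeric readings
        yield f"{year}\t{value}"

def reducer(lines):
    """Input arrives sorted by key, so lines for the same year are adjacent."""
    records = (line.rstrip("\n").split("\t", 1) for line in lines)
    for year, group in groupby(records, key=lambda kv: kv[0]):
        yield f"{year}\t{max(int(temp) for _, temp in group)}"

if __name__ == "__main__" and len(sys.argv) == 2:
    # Run as "python script.py map" or "python script.py reduce"
    stage = {"map": mapper, "reduce": reducer}[sys.argv[1]]
    for out in stage(sys.stdin):
        print(out)
```

Locally, the two stages can be tested with a pipeline such as `cat input.txt | python maxtemp.py map | sort | python maxtemp.py reduce`, where `maxtemp.py` and `input.txt` are hypothetical names. On a cluster, the script would be shipped to the nodes (for example with the `-files` option) and passed to the Hadoop Streaming JAR through its `-mapper` and `-reducer` options, together with `-input` and `-output` paths; the JAR's location depends on the installation.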

In Hadoop 1, MapReduce handled both resource management in the cluster and data processing. Hadoop 2 added another layer between MapReduce and HDFS, called YARN. YARN does the resource management, leaving data processing to the MapReduce component.

Figure # 6: MapReduce

What is YARN?

YARN stands for Yet Another Resource Negotiator. The YARN layer was introduced in Hadoop 2.0 as an intermediate layer to manage resources within the cluster. It works like a large-scale, distributed operating system for the cluster.

YARN manages the applications running on Hadoop so that multiple applications can run in parallel and share resources effectively, which was not always feasible with MapReduce alone. This makes Hadoop more suitable for interactive applications and operations, rather than only batch processing. YARN also did away with the JobTracker and TaskTracker and introduced two daemons: the ResourceManager, which runs once per cluster, and the NodeManager, which runs on each worker node.
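As a rough illustration of this division of responsibilities, the Python sketch below models a ResourceManager that grants containers (here, just an amount of memory in MB) from the capacity reported by each NodeManager, so that several applications share the cluster at the same time. It is a toy model: the classes, the `request_containers` method and the "most free memory first" policy are hypothetical, and real YARN schedulers (such as the Capacity Scheduler and Fair Scheduler) also account for CPU, queues and data locality.

```python
from dataclasses import dataclass, field

@dataclass
class NodeManager:
    """Hypothetical model of one worker node and its free memory."""
    name: str
    free_mb: int

@dataclass
class ResourceManager:
    """Toy cluster-wide allocator that grants containers on nodes with room."""
    nodes: list
    allocations: dict = field(default_factory=dict)

    def request_containers(self, app, count, mb_each):
        """Try to grant `count` containers of `mb_each` MB to `app`.
        Returns the node names that received a container."""
        granted = []
        for _ in range(count):
            # Pick the node with the most free memory (spreads load evenly)
            node = max(self.nodes, key=lambda n: n.free_mb)
            if node.free_mb < mb_each:
                break  # cluster is full; remaining requests must wait
            node.free_mb -= mb_each
            granted.append(node.name)
        self.allocations.setdefault(app, []).extend(granted)
        return granted

if __name__ == "__main__":
    rm = ResourceManager([NodeManager("nm1", 4096), NodeManager("nm2", 4096)])
    print(rm.request_containers("mapreduce-job", 3, 1024))
    print(rm.request_containers("spark-app", 3, 2048))  # only partly satisfied
    print(rm.allocations)
```

Both applications run side by side on the same nodes; when memory runs out, the second application simply receives fewer containers instead of blocking the first one.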

Other Hadoop Components

Besides HDFS, MapReduce and YARN, many other components can be used with Hadoop:

- Apache Flume – a tool for collecting, aggregating and moving large amounts of data into the Hadoop Distributed File System.

- Apache HBase – an open-source, non-relational, distributed database that runs on top of HDFS as part of the Hadoop ecosystem.

- Apache Hive – data warehouse software for Hadoop that provides data summarization, querying (through the SQL-like HiveQL) and analysis.

- Cloudera Impala – originally developed by the software company Cloudera, Impala is a massively parallel processing SQL query engine for data stored in Hadoop.

- Apache Oozie – a server-based workflow scheduling system, used primarily to manage Hadoop jobs.

- Apache Phoenix – an open-source, massively parallel, relational database engine built on Apache HBase.

- Apache Pig – a high-level platform for creating programs that run on Hadoop, written in a language called Pig Latin.

- Apache Spark – a fast engine for processing Big Data, especially well suited to graph processing and machine learning.

- Apache Sqoop – a tool for transferring large volumes of data between Hadoop and structured data stores, such as relational databases.

- Apache ZooKeeper – an open-source service used for configuration management and synchronization in large distributed systems.

- Apache Storm – an open-source system for distributed, real-time processing of data streams.

Conclusion

Hadoop is a very useful open-source framework for organizations that apply Big Data techniques in their business. Organizations traditionally ran Hadoop as an in-house installation, but given rising infrastructure costs and management requirements, many are now shifting to Hadoop in the Cloud.
